Introduction

Compute Express Link® (CXL®) is an interconnect architected for memory expansion, heterogeneous compute, and the disaggregation of system resources. Disaggregation with CXL provides efficient resource sharing and pooling while maintaining low latency and high bandwidth with cache-coherent load-store semantics. Anticipating that CXL will become ubiquitous in the data centers of tomorrow, the CXL Consortium has built additional frameworks to address the ever-increasing demand for memory and to service the rapidly rising number of cores in CPUs.

The concepts of switching, memory pooling, and fabric management were introduced with the CXL 2.0 specification, released in November 2020, which provides a standard for fully composable and disaggregated memory infrastructures. These new pooled memory architectures allow memory to be allocated to and de-allocated from any given compute instance on an as-needed basis, increasing memory utilization and the amount of memory available to any given compute instance while decreasing the total cost of ownership.

The CXL Fabric Manager Application Programming Interface (FM API) meets the management demands of such composable systems.

What is the CXL Fabric Manager?

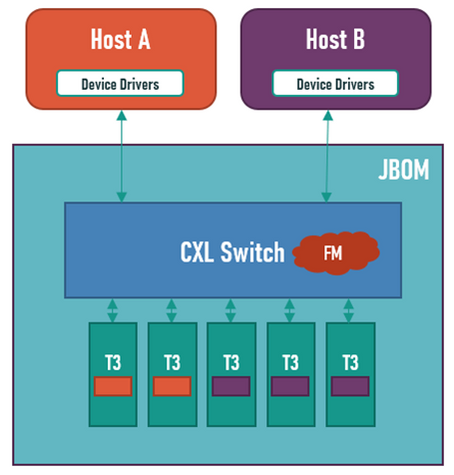

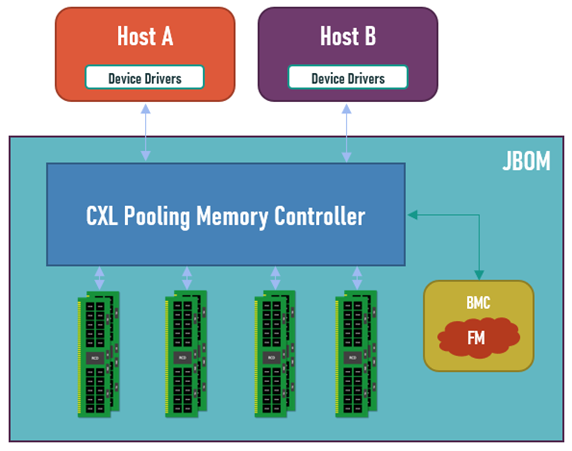

Figure 1a

How is it implemented?

The FM manages devices such as Single Logical Devices (SLDs), Multiple Logical Devices (MLDs), switches, and pooling memory controllers by programming the Component Command Interface (CCI). A CCI physically resides on the component itself, either as a PCI Express® (PCIe®) Memory Mapped Input/Output (MMIO) space or as a Management Component Transport Protocol (MCTP) endpoint (EP), through which commands are sent, status is reported, and responses are read. With MCTP EP support, any transport that has an existing MCTP binding specification, such as SMBus/I2C, PCIe, USB, or serial links, can be supported, and the FM discovers endpoints using the discovery process defined in the MCTP specification. The FM queries a component’s capabilities by reading the device’s “Command Effects Log” via the CCI.
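The capability query can be pictured with a short sketch. It assumes, for illustration only, that the log arrives as a byte string of 4-byte little-endian entries, each pairing a 2-byte opcode with a 2-byte effects bitmask. The function names and the sample opcode values are hypothetical, and the CXL specification remains the authority on the real layout.

```python
import struct
from typing import Dict


def parse_command_effects_log(raw: bytes) -> Dict[int, int]:
    """Map each advertised opcode to its effects bitmask.

    Assumed layout (illustrative): repeated 4-byte little-endian entries,
    a 2-byte opcode followed by a 2-byte effects bitmask.
    """
    if len(raw) % 4 != 0:
        raise ValueError("log length must be a multiple of 4 bytes")
    return {opcode: effects for opcode, effects in struct.iter_unpack("<HH", raw)}


def supports(log: Dict[int, int], opcode: int) -> bool:
    """Return True if the component advertises the given opcode."""
    return opcode in log


# Hypothetical log contents, not taken from a real device.
sample = struct.pack("<HHHH", 0x5100, 0x0000, 0x5201, 0x0002)
log = parse_command_effects_log(sample)
```

An FM built this way would call `supports(log, opcode)` before issuing a command, so that it never sends a request the component has not advertised.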

Command opcodes are a simple 2-byte structure: 1 byte identifies the command set and 1 byte identifies the command. Although the interface has no queue, the CCI supports background operations (BOs), which allow the FM to have multiple operations running simultaneously across multiple CCIs. When the FM launches a time-consuming management BO, the component immediately responds that a BO has begun. The component then provides percent-complete status updates every 2 seconds until it reports its final completion status. Optionally, if the FM has enabled the CCI interrupt mechanism, the component can send an interrupt to the FM to indicate completion. The FM may follow up with a status check to verify that the BO has completed.
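The opcode layout and the background-operation flow can be sketched as follows. This is a minimal simulation, not an implementation of the CCI: it assumes the command set occupies the upper byte of the 16-bit opcode, and the `SimulatedComponent` class, its method names, the status string, and the example opcode value are all hypothetical.

```python
import time
from typing import Callable, Iterable, List, Tuple


def encode_opcode(command_set: int, command: int) -> int:
    """Pack a command set byte and a command byte into a 16-bit opcode.

    Assumption: the command set sits in the upper byte.
    """
    if not (0 <= command_set <= 0xFF and 0 <= command <= 0xFF):
        raise ValueError("command set and command must each fit in one byte")
    return (command_set << 8) | command


def decode_opcode(opcode: int) -> Tuple[int, int]:
    """Split a 16-bit opcode into (command_set, command)."""
    return (opcode >> 8) & 0xFF, opcode & 0xFF


class SimulatedComponent:
    """Stand-in for a component that runs one background operation."""

    def __init__(self, progress_steps: Iterable[int]) -> None:
        self._steps = iter(progress_steps)

    def start_background_op(self, opcode: int) -> str:
        # The component answers immediately; the work continues in the background.
        return "BACKGROUND_STARTED"

    def background_status(self) -> int:
        # Report the next percent-complete value, then 100 once steps run out.
        return next(self._steps, 100)


def run_background_op(
    component: SimulatedComponent,
    opcode: int,
    poll_interval: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[int]:
    """Start a background operation and poll until it reports 100 percent."""
    if component.start_background_op(opcode) != "BACKGROUND_STARTED":
        raise RuntimeError("component did not start a background operation")
    history: List[int] = []
    while True:
        percent = component.background_status()
        history.append(percent)
        if percent >= 100:
            return history
        sleep(poll_interval)
```

Polling is shown because it needs no extra setup; where the interrupt mechanism is enabled, the loop would be replaced by waiting for the interrupt and then making a single status check. The `sleep` parameter is injectable so the loop can be exercised without real delays.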

Practical FM example: MLD Management

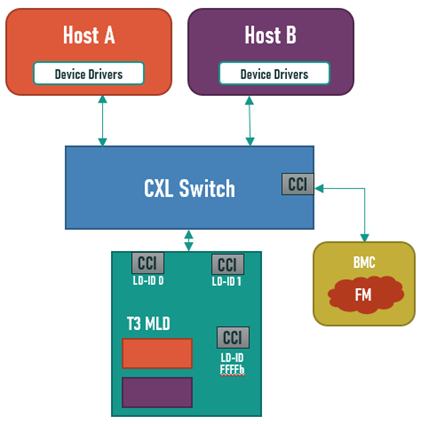

Figure 2

Referring to Figure 2, the following is an example of a provisioning sequence:

- At initialization, the FM detects the switch’s CCI
- Via the CCI, the FM discovers the device is a switch and that the switch also contains an MLD port
- The FM queries the MLD FM LD to identify the MLD’s capabilities
- The FM binds LD-ID0 to host A
- The FM binds LD-ID1 to host B
- Host CPUs boot, discover the memory bound to their VHs

After the sequence is completed, each host can treat the memory provisioned from the JBOM like any other memory range in its system. Despite there being only a single physical link between the switch and the MLD, each host can independently operate on its bound memory ranges as dedicated memory space.
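The provisioning sequence above can be modeled with a small in-memory sketch. It does not talk to real hardware or use the FM API; the class names, method names, and the 16 GiB capacities are hypothetical and serve only to show how binding gives each host its own view of a shared MLD.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class LogicalDevice:
    ld_id: int
    size_gib: int
    bound_host: Optional[str] = None  # None means the LD is unbound


@dataclass
class MultiLogicalDevice:
    lds: Dict[int, LogicalDevice]


class FabricManagerModel:
    """Toy model of an FM managing one MLD behind a switch."""

    def __init__(self, mld: MultiLogicalDevice) -> None:
        self.mld = mld

    def query_ld_ids(self) -> List[int]:
        # Stands in for querying the MLD's capabilities.
        return sorted(self.mld.lds)

    def bind(self, ld_id: int, host: str) -> None:
        ld = self.mld.lds[ld_id]
        if ld.bound_host is not None:
            raise RuntimeError(f"LD {ld_id} is already bound to {ld.bound_host}")
        ld.bound_host = host

    def unbind(self, ld_id: int) -> None:
        self.mld.lds[ld_id].bound_host = None

    def memory_visible_to(self, host: str) -> int:
        # Each host sees only the capacity of the LDs bound to it.
        return sum(
            ld.size_gib for ld in self.mld.lds.values() if ld.bound_host == host
        )


# Hypothetical MLD with two 16 GiB logical devices.
mld = MultiLogicalDevice({0: LogicalDevice(0, 16), 1: LogicalDevice(1, 16)})
fm = FabricManagerModel(mld)
fm.bind(0, "host A")
fm.bind(1, "host B")
```

In this model, `fm.memory_visible_to("host A")` counts only LD-ID0, which mirrors the point that each host operates on its bound range as dedicated memory even though both LDs share one physical link.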

Summary

The CXL FM framework enabled by CXL 2.0 brings fully composable and disaggregated memory to next-generation data centers and server architectures. The concepts of switching, memory pooling, and fabrics enable memory bandwidth and capacity to be bound to and unbound from compute resources in a workload-dependent manner.

This composability will decrease total cost of ownership by increasing memory utilization and decreasing the total memory footprint required by the cluster. The FM is the application logic that runs as part of the data center’s management infrastructure to construct and dismantle servers and their composable resources.

The CXL 3.0 specification will bring improvements for even better scalability and resource utilization, including enhanced memory pooling, multi-level switching with fabric capabilities, and new enhanced coherency support, while still maintaining full backward compatibility with CXL 2.0, CXL 1.1, and CXL 1.0.

For more detailed information and discussion on the CXL FM, please take a look at the Fabric Manager Webinar and Q&A hosted by Vincent Hache, Director of Systems Architecture, Rambus.

For more information on CXL technology, visit our Resource Library for white papers, presentations, and webinars.

Interested in contributing?

Join the CXL Consortium to participate in the technical working groups and influence the direction of the CXL specification. Work on the CXL 3.0 specification is already underway! Learn more about the CXL Consortium membership here.

Compute Express Link® and CXL® Consortium are trademarks of the Compute Express Link Consortium.