Author: Grant Mackey, CTO Jackrabbit Labs

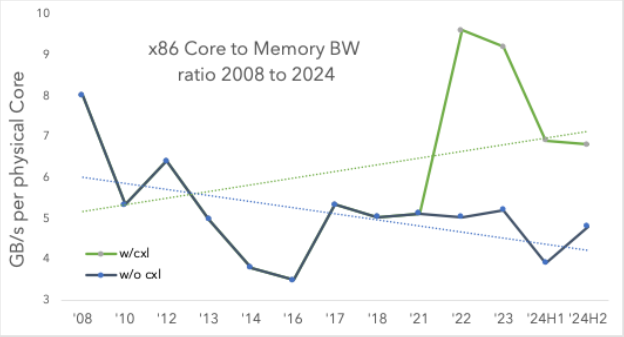

Core stranding[1] is real and getting worse with each generation of x86 server CPU. As the figure above shows, Compute Express Link® (CXL®) can provide significant additional memory bandwidth per core, albeit with higher latency than local DDR. However, we at Jackrabbit Labs argue that today's datacenter is full of mature, workhorse server workloads for which the impact of CXL latency on application performance[2] ranges from ‘don’t care’ to ‘barely noticeable.’ These workloads just need some memory somewhere, not necessarily local, high-performance DDR.
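To make the bandwidth-per-core argument concrete, here is a small sketch that divides nominal peak memory bandwidth by core count. All configuration values are hypothetical and chosen only for illustration, not read from the figure: a 64-core socket with eight DDR5-4800 channels (4800 MT/s × 8 bytes = 38.4 GB/s per channel) and one CXL device on a PCIe 5.0 x16 link (roughly 64 GB/s raw, per direction). Sustained bandwidth in practice is lower than these peaks.

```python
"""Back-of-the-envelope peak memory bandwidth per core.

All numbers are nominal peak figures; real sustained bandwidth is lower.
The CPU and device configuration below is hypothetical.
"""


def ddr_channel_gbps(mega_transfers_per_s: float, bus_bytes: int = 8) -> float:
    """Peak bandwidth of one DDR channel in GB/s (64-bit data bus by default)."""
    return mega_transfers_per_s * bus_bytes / 1000.0


def bandwidth_per_core(cores: int, ddr_channels: int, ddr_mts: float,
                       cxl_links_gbps: tuple[float, ...] = ()) -> float:
    """Peak GB/s available per core from local DDR plus any CXL memory links."""
    total = ddr_channels * ddr_channel_gbps(ddr_mts) + sum(cxl_links_gbps)
    return total / cores


# Hypothetical 64-core socket with 8 channels of DDR5-4800.
local_only = bandwidth_per_core(cores=64, ddr_channels=8, ddr_mts=4800)

# Same socket plus one CXL device on a PCIe 5.0 x16 link (~64 GB/s raw, per direction).
with_cxl = bandwidth_per_core(cores=64, ddr_channels=8, ddr_mts=4800,
                              cxl_links_gbps=(64.0,))

print(f"local DDR only:    {local_only:.1f} GB/s per core")
print(f"with one CXL link: {with_cxl:.1f} GB/s per core")
```

With these assumed numbers, local DDR alone gives 4.8 GB/s per core and adding the CXL link raises it to 5.8 GB/s per core. The point is the shape of the calculation, not the specific values.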

As a very important bonus, when these latency-tolerant workloads consume CXL memory, local DRAM is freed up for the most demanding, performance-critical workloads. What does this mean for the end consumer? Possibly less DDR5 deployed per server, and possibly fewer additional servers to purchase. Either way, the efficiencies of CXL-attached memory may have a positive impact on your infrastructure budget.

A first step to CXL in the datacenter

Jackrabbit Labs worked with OCP partners Uber and SK hynix[3] on a project for the 2024 OCP Global Summit. Our goal was to have Kubernetes use CXL pooled memory without any modifications to Kubernetes or to the end-user pods, and then to see how workloads ran on the composable CXL memory regions. Uber provided the customer use case and workload[4], SK hynix volunteered their Niagara CXL MH-MLD (multi-headed, multi-logical device) and their time, and we (JRL) wrote a middleware layer we call OFMA (Open Fabric Management Agent) to act as the bridge between the hardware and end-user workloads.

At the end of the collaboration, we had demonstrated not only that this solution is possible and robust, but also that the target workloads had zero issues running on CXL memory that was literally off-server, in a CXL pool. Shortly before, and especially after, OCP ’24, we have seen white papers and academic publications that support our conclusion: many applications just need memory and generally don’t mind where it is[5].

Can you do it for real now?

Absolutely. We see real value today for a wide range of commonplace applications and workloads. Jackrabbit Labs is a software and services company focused on enabling new and emerging memory fabrics like CXL. A primary technical focus of our work is understanding the quirks and hangups of fabric and endpoint devices so we can better assist end users interested in CXL and CXL fabrics. Based on our practical experience, which includes porting our software across a range of devices and servers, there’s no reason to wait for CXL 3.X hardware. OFMA works with the CXL hardware available today (i.e., CXL 1.1 and 2.0), and its capabilities will only get richer as CXL 3.X hardware emerges.

Sounds great! How do we grow adoption and the ecosystem?

A primary barrier to adoption is that CXL is ‘new’ while the workloads referenced above are quite mature, meaning that:

a) these applications are not natively NUMA-aware, because the need did not exist when they were created;

b) they are most likely providing essential services; and

c) the customer is not amenable to rewriting or modifying a reliable and familiar application[6].

So if the application would need modification to take advantage of the new hardware, but the customer isn’t willing to modify it, how do we proceed? You write software (middleware) to bridge the gap.
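One common way to run an unmodified, non-NUMA-aware program on CXL memory is to let the operating system handle placement. The sketch assumes the CXL memory is onlined in Linux as a CPU-less (memory-only) NUMA node, a typical configuration for CXL memory expanders, and uses the standard `numactl --membind` option to bind a process's allocations to that node. It is a hypothetical illustration of the “no application changes” idea, not how OFMA works; the helper names and the `service.jar` command are made up for the example.

```python
"""Hypothetical sketch: run an unmodified program with its memory bound to CXL.

Assumption: the CXL memory is online in Linux as a CPU-less (memory-only)
NUMA node. This is NOT OFMA's implementation; it only illustrates that the
application itself does not need to change.
"""
from pathlib import Path


def parse_cpulist(text: str) -> list[int]:
    """Expand a sysfs cpulist such as '0-3,8' into [0, 1, 2, 3, 8]."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty cpulist means the node has no CPUs
        lo, _, hi = part.partition("-")
        cpus.extend(range(int(lo), int(hi or lo) + 1))
    return cpus


def cpuless_nodes(cpulists: dict[int, str]) -> list[int]:
    """Return NUMA node IDs whose cpulist is empty, i.e. memory-only nodes."""
    return sorted(n for n, text in cpulists.items() if not parse_cpulist(text))


def read_sysfs_cpulists(root: str = "/sys/devices/system/node") -> dict[int, str]:
    """Read nodeN/cpulist for every NUMA node on a Linux host."""
    result: dict[int, str] = {}
    for node_dir in Path(root).glob("node[0-9]*"):
        result[int(node_dir.name[4:])] = (node_dir / "cpulist").read_text()
    return result


def membind_command(node: int, argv: list[str]) -> list[str]:
    """Prefix argv with numactl so the program's allocations come from `node`."""
    return ["numactl", f"--membind={node}", *argv]


if __name__ == "__main__":
    # Input shaped like sysfs on a hypothetical host where node 2 is CXL memory.
    example = {0: "0-31\n", 1: "32-63\n", 2: "\n"}
    target = cpuless_nodes(example)[0]
    print(membind_command(target, ["java", "-jar", "service.jar"]))
```

Note that `--membind` is strict: if the CXL node fills up, the process can run out of memory instead of spilling into local DRAM. `numactl --preferred=<node>` is the softer choice when falling back to local memory is acceptable.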

Jackrabbit Labs has demonstrated that its OFMA can seamlessly integrate Kubernetes with CXL memory devices[7]. The demonstration box is arguably checked, but the impact is not yet being felt across the industry. Going forward, being a little louder about CXL adoption is key: CXL industry participants need to keep sharing real examples of improvements and successes from CXL deployments as often as possible.

Takeaways

CXL has value today, not years from now. Jackrabbit Labs and others have shown the why, the how, and the value of memory pooling on CXL 1.1 and 2.0 hardware. Real demonstrations of full-stack integration between resource schedulers like k8s and CXL devices, without modifying end-user workflows and applications, are a big step toward maturity of the technology.

However, the software ecosystem for CXL remains nascent. Hardware vendors, system integrators, and kernel developers are focused on bringing world-class, robust, compliant hardware and firmware to market. Now we need more software and ecosystem development from these same large players.

We encourage you to check out the efforts of the CXL Consortium as well as the OCP CMS (Composable Memory Systems) working group, as these two organizations are contributing the most effort and having the most impact on the ecosystem. If you’re interested in learning what CXL technology-based solutions can do for your infrastructure, contact us. We’d love to hear from you.

Footnotes

1. Or memory, take your pick. Either way you look at it, you’ve bought resources you can’t take advantage of.

2. Think web workloads, large data processing like Spark/Pandas, TPC-H, KV stores, etc.

3. Also a CXL Consortium member.

4. Kubernetes, Go, and Java workloads.

5. That is, as long as latency stays closer to nanoseconds than microseconds, the workload can treat it like DRAM unconditionally.

6. “I have to assume this new thing will somehow make my life harder.” – Every IT infrastructure person ever. Datacenter downtime costs money; too much downtime from a new infrastructure component can easily erase any savings it provided in the first place.

7. And others! For example, Intel Finland and Samsung have presented or spoken about similar middleware for CXL resource orchestration.