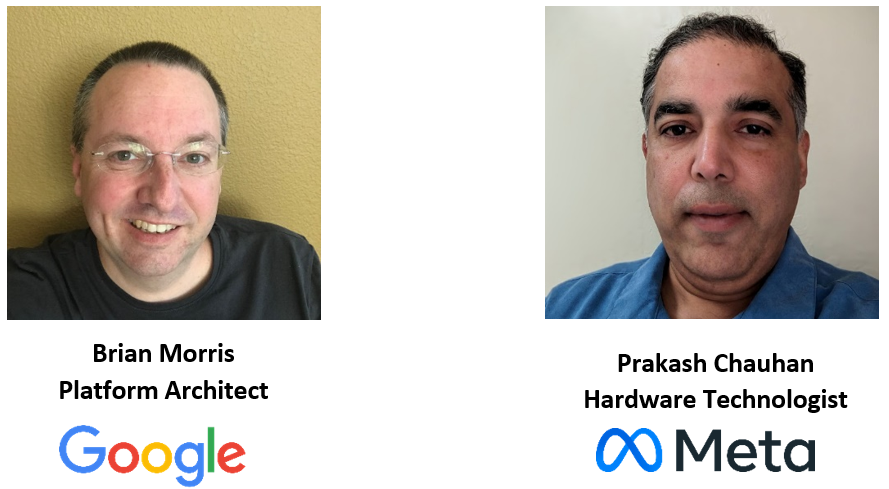

The CXL® Consortium recently hosted a webinar exploring how CXL type-3 devices can support a new tier of memory, allowing for the expansion of platform memory capacity incrementally and cost-effectively to enable AI & HPC workloads. During the webinar, Brian Morris, Google, and Prakash Chauhan, Meta, shared an overview of the applications and use cases for CXL memory expansion, showcasing how CXL can drive memory architectures of the future.

If you were not able to attend the live webinar, the recording is available via YouTube and the webinar presentation slides are available for download on the CXL Consortium Website. We received great questions from the audience during the live Q&A but were not able to address them all during the webinar. Below, our presenters Brian Morris (Google) and Prakash Chauhan (Meta) answer the questions we didn’t get to.

Q: Why did you choose 3 DIMMs per channel configuration instead of a factor of 2? Does unbalanced loading on the CXL controller affect the maximum DDR4 speeds it can achieve?

[Prakash]: Increased loading due to 3DPC does mean reduced operating speeds, but this does not significantly affect system performance because cold pages are placed in the CXL-attached memory.

[Brian]: This also gives us 50% more capacity to amortize the CXL silicon cost, which is a big benefit.

Q: Taking a latency hit when going to the CXL tier stalls the CPU core for 100s of nanoseconds to the microsecond range when compression+encryption is applied in the IO path. Is this tolerable for compute in hyperscale environments?

[Prakash]: It is yet to be seen if there are exposed latencies that are as high as microseconds. We have established some latency guidelines even when compressed memory is accessed and the use of caching can mitigate latency for subsequent accesses to the same page. The intent is to reduce the occurrence of hits to the slowest tiers of memory so that the impact is small.

[Brian]: The mantra for computer architecture is: Make the common case fast. The corollary is that it is okay for the uncommon case to be slow. If you’re going to CXL for every read request, your performance is going to have problems. If you add a few hundred nanoseconds going to memory once every couple of minutes, you aren’t going to notice. From a latency QoS point of view, this looks similar to getting stuck behind a refresh for natively attached DDR5.
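The "make the common case fast" argument can be illustrated with a weighted-average latency calculation. This is a minimal sketch: the function name and the 100 ns and 500 ns figures are assumptions chosen for illustration, not numbers from the webinar.

```python
def average_latency_ns(local_ns, cxl_ns, cxl_fraction):
    """Weighted-average memory latency for a two-tier system.

    cxl_fraction is the share of accesses served from the CXL tier;
    the rest are served from natively attached DRAM.
    """
    if not 0.0 <= cxl_fraction <= 1.0:
        raise ValueError("cxl_fraction must be between 0 and 1")
    return (1.0 - cxl_fraction) * local_ns + cxl_fraction * cxl_ns


# Assumed, illustrative latencies (not measured values).
LOCAL_NS = 100.0   # natively attached DRAM
CXL_NS = 500.0     # CXL tier, including any decompression cost

for fraction in (0.0, 0.001, 0.01, 0.5, 1.0):
    avg = average_latency_ns(LOCAL_NS, CXL_NS, fraction)
    print(f"{fraction:7.3%} of accesses to CXL -> {avg:6.1f} ns average")
```

With these assumed numbers, sending 1% of accesses to the CXL tier raises the average from 100 ns to 104 ns, while sending every access there raises it to 500 ns.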

Q: What is the ratio of CXL memory to host DRAM needed to balance the performance and latency characteristics? Linux has weighted interleave added to the latest kernel to address this. Also, does your implementation have a kernel version dependency?

[Prakash]: The ratio is workload dependent, but as published in papers by Meta and Google across multiple workloads, there is a significant percentage of cold memory that can be moved to CXL. There are several ongoing efforts in Linux to support tiered memory and for CXL support in general, so a specific version is not something that we can call out.

[Brian]: At a minimum you need enough host memory to provide full bandwidth (use all available DDR channels to maximize native DDR bandwidth). Beyond that I agree that it is workload dependent.

Q: Given the improved CPU performance relative to memory costs, could we achieve a better performance-to-cost ratio by using different compression schemes tailored to the data nature on the host, rather than relying on less effective inline compression on the device?

[Brian]: One of the challenges with doing compression on the host is latency added from using a page fault to initiate the software flow. If you’re willing to change your workload to do the compression & decompression then that could work, but it will require a lot of enabling. Incidentally, there is nothing that precludes using a general purpose core to do fancy compression on the CXL device.

Q: Since CXL writes are 64-byte accesses, it doesn’t seem like you’d get much reduction by doing inline compression of each 64-byte write. Are you aggregating multiple 64-byte writes in some way before performing the compression?

[Prakash]: While CXL transactions are cache line granular, most hotness tracking, allocation and migration between tiers happens at a page granularity. This may or may not be the compression block granularity. If it were, then a cache line write to a compressed page would require the page to be first decompressed then cached and the cache line updated in the cache. Any further writes that are temporally adjacent would happen in the cached region until the page needs to be evicted from the cache to make room for something else.
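The write path described above (decompress the page, cache it, update the cache line, recompress on eviction) can be sketched as a toy model. Everything here is an assumption for illustration: the class name `CompressedTier`, the 4 KiB compression block, the LRU policy, and the use of `zlib` stand in for whatever a real controller does in hardware.

```python
import zlib
from collections import OrderedDict

PAGE_SIZE = 4096   # assumed compression block size
LINE_SIZE = 64     # CXL.mem cache-line granularity


class CompressedTier:
    """Toy model: pages are stored compressed, with a small LRU cache
    of decompressed pages that absorbs cache-line reads and writes."""

    def __init__(self, cache_pages=2):
        self.compressed = {}          # page number -> compressed bytes
        self.cache = OrderedDict()    # page number -> bytearray, in LRU order
        self.cache_pages = cache_pages
        self.decompressions = 0

    def _load(self, page):
        if page in self.cache:                    # hit: no decompression needed
            self.cache.move_to_end(page)
            return self.cache[page]
        if page in self.compressed:               # miss: decompress the whole page
            data = bytearray(zlib.decompress(self.compressed.pop(page)))
            self.decompressions += 1
        else:                                     # never written: zero-filled page
            data = bytearray(PAGE_SIZE)
        if len(self.cache) >= self.cache_pages:   # evict LRU page, recompressing it
            victim, victim_data = self.cache.popitem(last=False)
            self.compressed[victim] = zlib.compress(bytes(victim_data))
        self.cache[page] = data
        return data

    def write_line(self, addr, line):
        if len(line) != LINE_SIZE or addr % LINE_SIZE:
            raise ValueError("writes must be aligned 64-byte lines")
        page, offset = divmod(addr, PAGE_SIZE)
        self._load(page)[offset:offset + LINE_SIZE] = line

    def read_line(self, addr):
        page, offset = divmod(addr, PAGE_SIZE)
        return bytes(self._load(page)[offset:offset + LINE_SIZE])


tier = CompressedTier(cache_pages=1)
tier.write_line(0, b"\xAA" * LINE_SIZE)          # page 0 is cached
tier.write_line(64, b"\xAB" * LINE_SIZE)         # adjacent write hits the cache
tier.write_line(PAGE_SIZE, b"\xBB" * LINE_SIZE)  # page 1 evicts and compresses page 0
print(len(tier.compressed[0]), "compressed bytes for page 0")
print(tier.read_line(0) == b"\xAA" * LINE_SIZE, tier.decompressions)
```

In this model, compression works on whole pages rather than individual 64-byte writes, so temporally adjacent writes to the same page cost no extra compression work until the page is evicted.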

Q: With the CXL controller, reusing RDIMMs still requires building a new memory board similar to the motherboard. Are the “software-defined memory” companies building applications that reuse older server motherboards more efficiently?

[Brian]: I haven’t seen anything like this.

[Prakash]: I haven’t either, and without symmetric CXL.mem support, these solutions would have to be software-based schemes using RDMA, etc., so they would not necessarily provide high-performance, low-latency load/store memory.

Q: How does the memory controller identify cold data vs hot data?

[Prakash]: Hotness monitoring can be done in software (by scanning the page access bits) or in hardware (by tracking which pages were accessed recently/repeatedly).
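The software approach, scanning page access bits, can be sketched with a toy model. The classes `PageTable` and `HotnessScanner` and the `cold_after_scans` threshold are hypothetical names for illustration; a real kernel reads and clears the accessed bit in hardware page-table entries rather than a Python list.

```python
class PageTable:
    """Toy page table: one accessed bit per page, set on every access."""

    def __init__(self, num_pages):
        self.accessed = [False] * num_pages

    def touch(self, page):
        self.accessed[page] = True


class HotnessScanner:
    """Periodically reads and clears accessed bits, counting how many
    consecutive scan intervals each page has gone untouched."""

    def __init__(self, table, cold_after_scans=3):
        self.table = table
        self.cold_after_scans = cold_after_scans   # assumed threshold
        self.idle_scans = [0] * len(table.accessed)

    def scan(self):
        for page, was_accessed in enumerate(self.table.accessed):
            if was_accessed:
                self.idle_scans[page] = 0
                self.table.accessed[page] = False  # clear for the next interval
            else:
                self.idle_scans[page] += 1

    def cold_pages(self):
        """Pages idle long enough to be candidates for the CXL tier."""
        return [p for p, idle in enumerate(self.idle_scans)
                if idle >= self.cold_after_scans]


table = PageTable(4)
scanner = HotnessScanner(table, cold_after_scans=2)
for _ in range(3):          # three scan intervals
    table.touch(0)          # pages 0 and 2 are touched in every interval
    table.touch(2)
    scanner.scan()
print(scanner.cold_pages())  # pages 1 and 3 were never touched
```

The scan interval and the idle threshold trade detection speed against scanning overhead. A hardware tracker would instead count accesses directly, without walking page tables.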

Q: Is pooling using MLD really needed? If not, how can you establish memory pooling at the rack level?

[Prakash]: Our talk did not necessarily cover pooling. Pooling requires either MLD with switches or MH-SLD (single device exposed per CXL link with multiple CXL ports to connect to multiple CPUs).

Q: When do you see PCI Express® (PCIe®) Gen 6 as a requirement? Are you primarily considering x8 or are you also considering an x16 interface?

[Prakash]: For the controller we specified, we requested 4ch, 3DPC, Gen5 x16 for DDR4 and 4ch, 2DPC, Gen6 x16 for DDR5. This roughly balances the CXL bandwidth against the DDR bandwidth.

[Brian]: There is an interesting side-effect of compression: it decouples the bandwidth of the media from the bandwidth of the link. If you have great locality and great compressibility, you’re going to want a faster link because you’re satisfying most of your requests from the block cache. If you have poor locality, then Gen5 is probably enough bandwidth.
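The link-versus-DDR balance Prakash mentions can be checked with back-of-the-envelope arithmetic. The DDR data rates below (2400 MT/s for DDR4 at 3DPC, 4800 MT/s for DDR5 at 2DPC) and the 64-bit data width per channel are assumptions for illustration, since the answer does not state operating speeds. The PCIe figures are raw per-direction rates that ignore encoding and protocol overhead.

```python
def ddr_bandwidth_gbs(channels, mega_transfers_per_s, bus_bytes=8):
    """Peak DDR bandwidth in GB/s, assuming a 64-bit (8-byte) data bus per channel."""
    return channels * mega_transfers_per_s * bus_bytes / 1000.0


def pcie_raw_bandwidth_gbs(giga_transfers_per_s, lanes):
    """Raw per-direction link bandwidth in GB/s (one bit per transfer per lane),
    ignoring encoding and flit overhead."""
    return giga_transfers_per_s * lanes / 8.0


configs = [
    # (label, DDR channels, assumed MT/s, PCIe GT/s per lane, lanes)
    ("DDR4 4ch 3DPC + Gen5 x16", 4, 2400, 32, 16),
    ("DDR5 4ch 2DPC + Gen6 x16", 4, 4800, 64, 16),
]

for label, channels, mts, gts, lanes in configs:
    ddr = ddr_bandwidth_gbs(channels, mts)
    link = pcie_raw_bandwidth_gbs(gts, lanes)
    print(f"{label}: DDR {ddr:.1f} GB/s, link {link:.1f} GB/s per direction "
          f"({2 * link:.1f} GB/s both directions)")
```

Under these assumptions, the DDR peak in each configuration falls between the link's one-direction and two-direction raw bandwidth, which is consistent with a rough balance for mixed read and write traffic.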

Q: Do you see any fundamental issues that are inhibiting CXL memory adoption by hyperscalers?

[Prakash]: The main issues are:

- keeping costs lower than non-CXL memory

- manageability/serviceability similar to natively attached DDR

- software stack to deal with slower tiers without causing application performance to worsen.

These issues are not show-stoppers; it just takes time to make sure that everything works.

[Brian]: It’s all about value. If the solution provides significant value, we’ll figure out how to work through the issues. The solution we outline here provides significant value.